Featured Projects

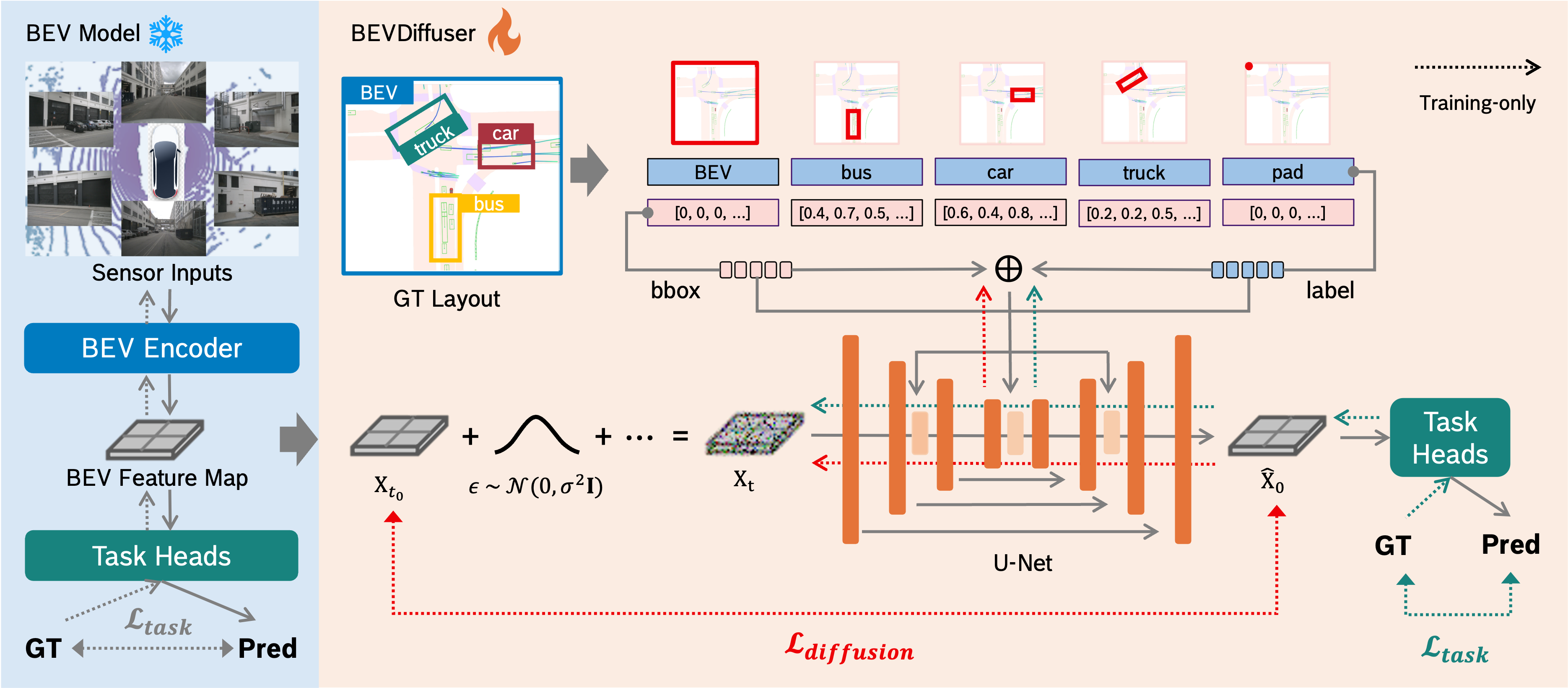

BEVDiffuser: Plug-and-Play Diffusion Models

BEVDiffuser is a novel diffusion model that effectively denoises BEV feature maps using the ground-truth object layout as guidance. BEVDiffuser can be used in a plug-and-play manner at training time to enhance existing BEV models, without modifying their architectures or adding computational overhead at inference. CVPR 2025 (Highlight).


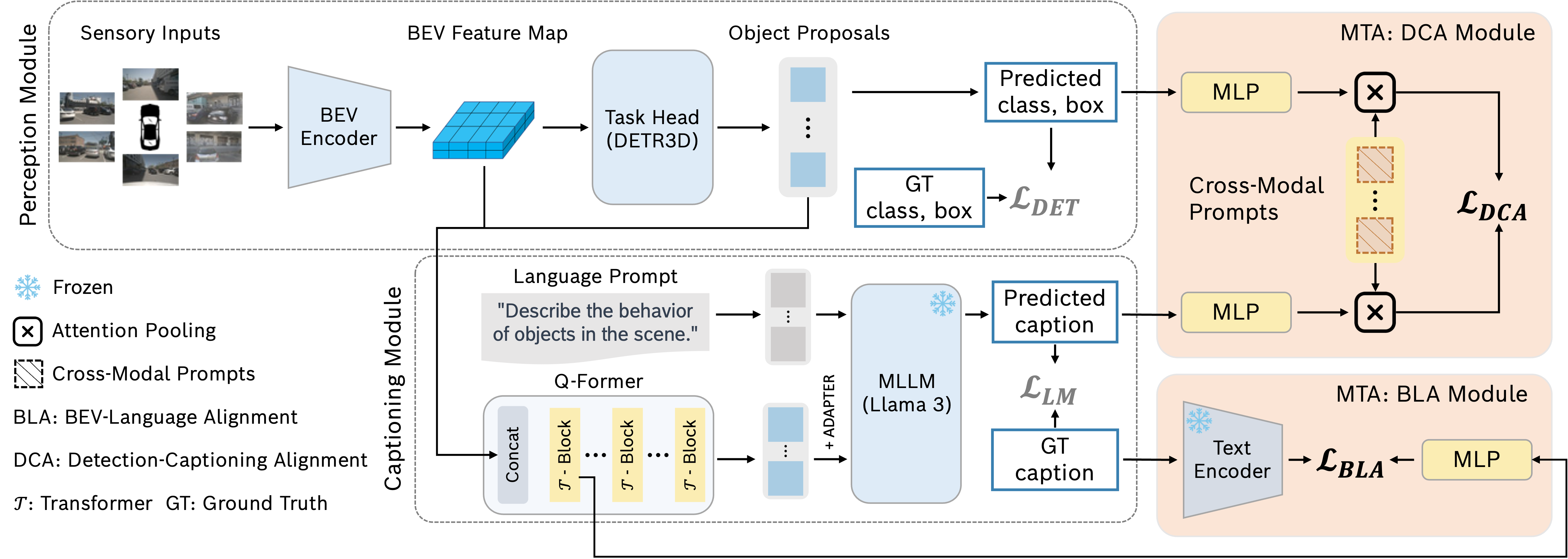

MTA: Multimodal Task Alignment

MTA is a novel multimodal task alignment framework that boosts BEV perception and captioning. MTA enforces alignment through multimodal contextual learning and cross-modal prompting mechanisms. arXiv.


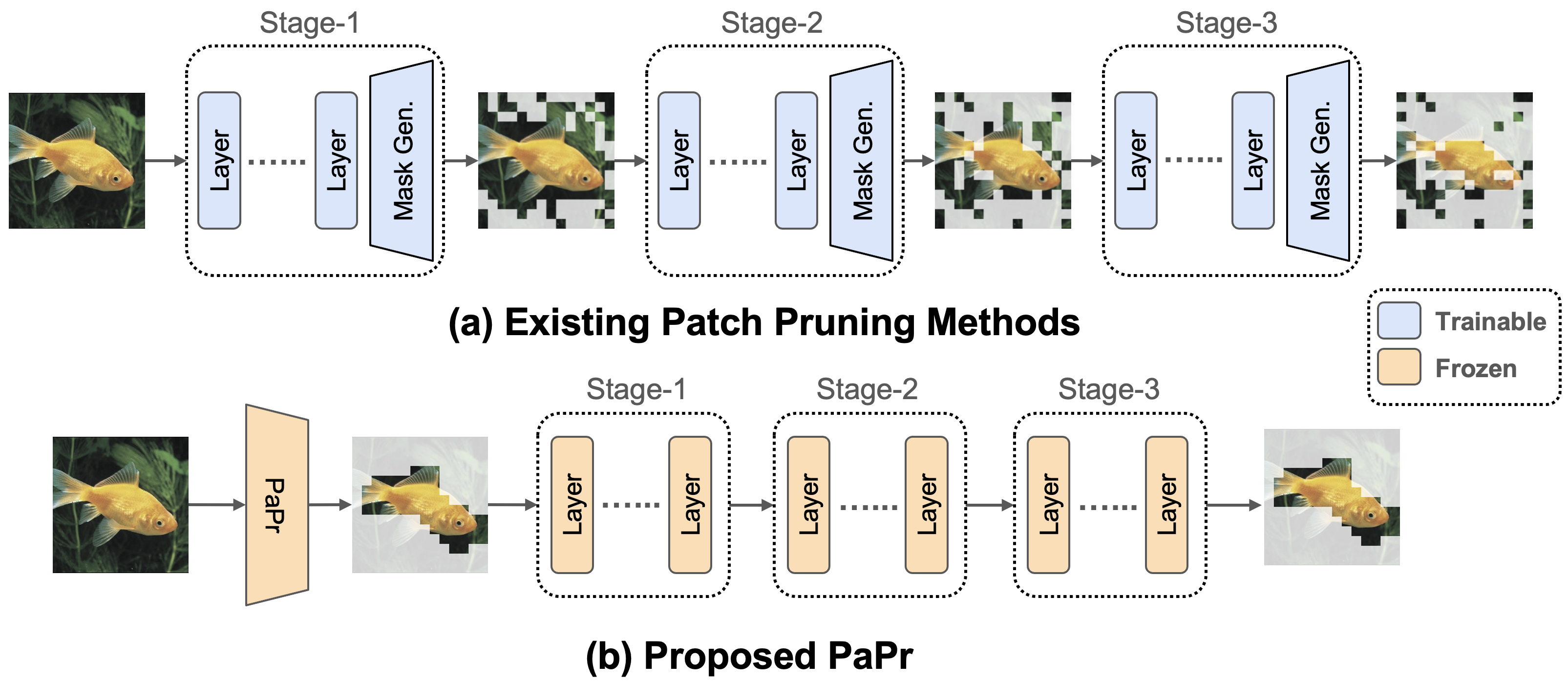

PaPr: Patch Pruning for Faster Inference

PaPr is a novel background patch pruning method that can seamlessly operate with ViTs for faster inference (>2x). PaPr is a training-free approach and can be easily plugged into existing token pruning methods for further efficiency. ECCV 2024.
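
PaPr's own patch-scoring mechanism is not described here, so the following is only a generic sketch of the final pruning step, assuming a per-patch foreground score is already available for each ViT patch token. The function name `prune_patches`, the `scores` input, and the `keep_ratio` parameter are hypothetical; the sketch simply keeps the highest-scoring patch tokens (plus the class token) so that later transformer layers process fewer tokens.

```python
import numpy as np


def prune_patches(tokens: np.ndarray, scores: np.ndarray, keep_ratio: float = 0.5) -> np.ndarray:
    """Keep the highest-scoring patch tokens, preserving the leading [CLS] token.

    tokens: (1 + N, D) array; row 0 is the class token, rows 1..N are patch tokens.
    scores: (N,) per-patch foreground score. Where these scores come from is an
            assumption (hypothetical input), not PaPr's published scoring method.
    keep_ratio: fraction of patch tokens to retain (hypothetical parameter).
    """
    cls_tok, patches = tokens[:1], tokens[1:]
    n_keep = max(1, int(round(keep_ratio * patches.shape[0])))
    # Indices of the n_keep highest-scoring patches, re-sorted to keep spatial order.
    keep = np.sort(np.argsort(scores)[::-1][:n_keep])
    # Discarded (background) patches are simply dropped; no training is involved.
    return np.concatenate([cls_tok, patches[keep]], axis=0)
```

For a ViT-B/16 input with 196 patch tokens of width 768, `prune_patches(tokens, scores, keep_ratio=0.5)` would return 99 tokens (98 patches plus the class token). Because the step only removes rows from the token sequence, it needs no retraining in this sketch.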


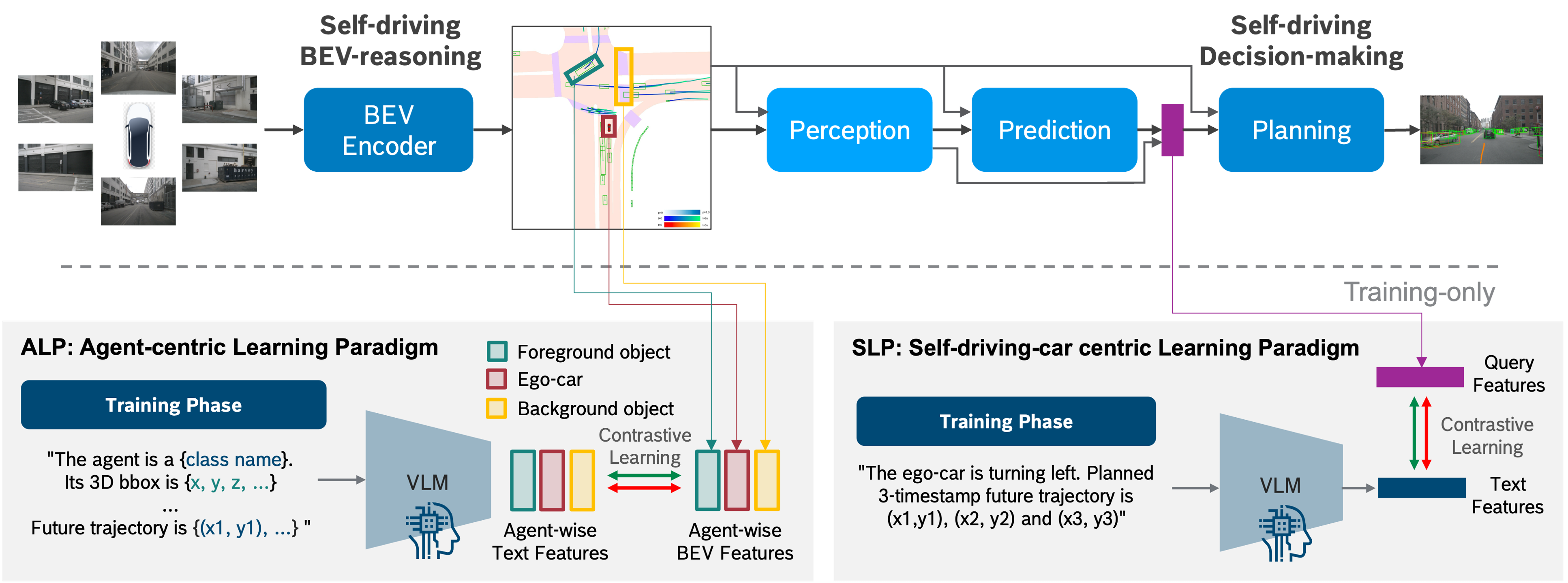

VLP: Vision Language Planning

VLP is a vision language planning approach for enhancing end-to-end autonomous driving. VLP is a training-only approach that distills the power of LLMs into existing autonomous driving stacks for improved performance. CVPR 2024.


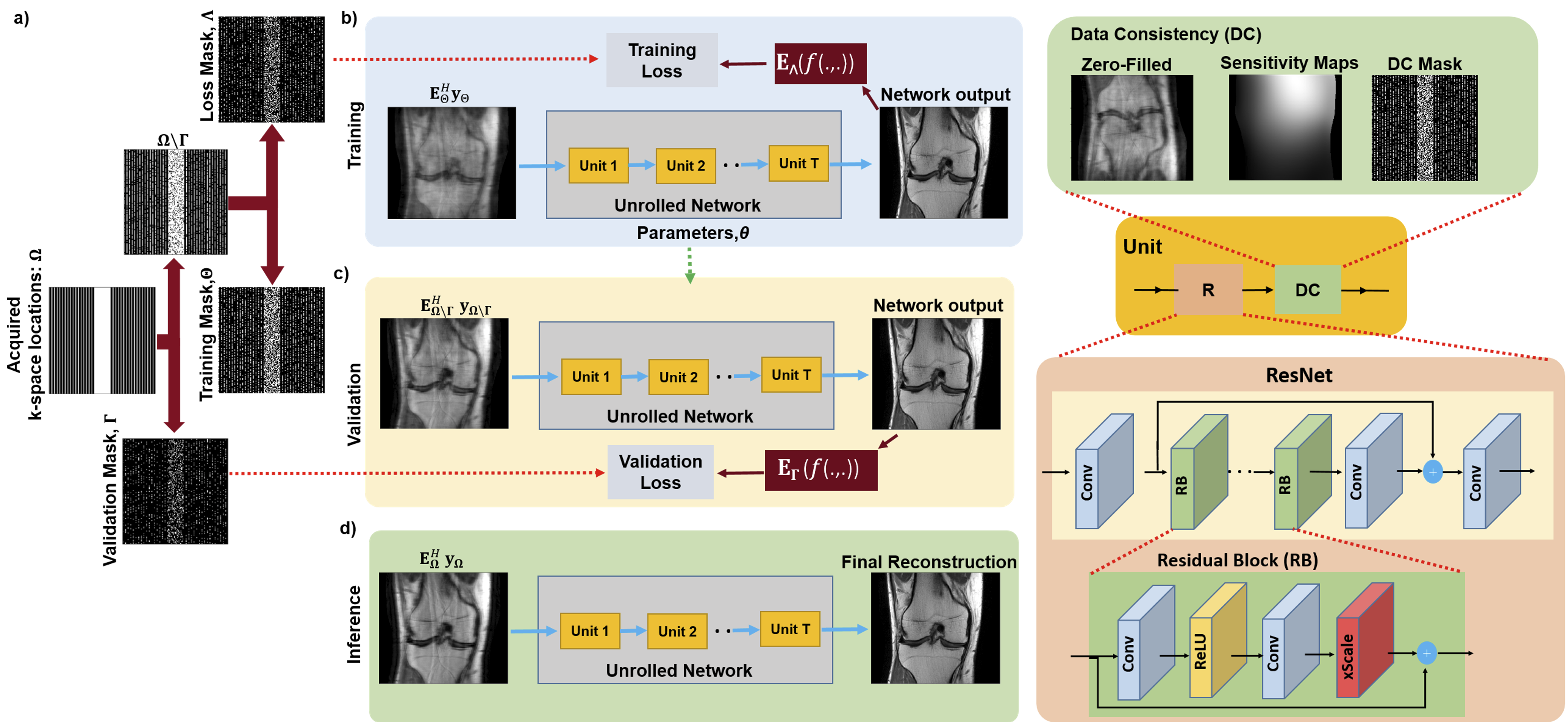

ZS-SSL: Zero-Shot Self-Supervised Learning

Developed the zero-shot self-supervised learning (ZS-SSL) methodology, which performs test-time training with a well-defined stopping criterion and tackles out-of-distribution challenges via domain adaptation. ICLR 2022.


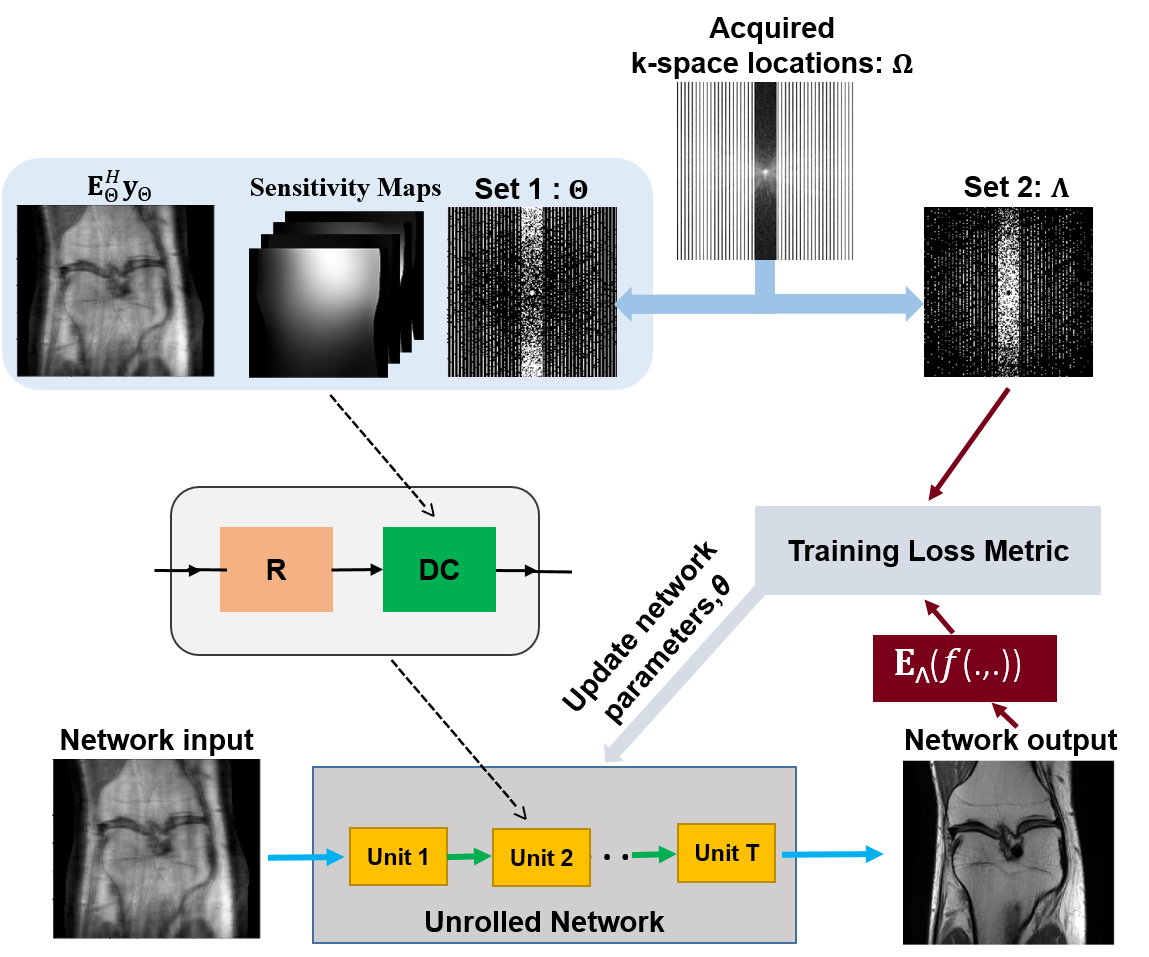

SSDU: Self-Supervision via Data Undersampling

SSDU is the pioneering self-supervised learning work that enables reconstruction without ground-truth data. SSDU splits acquired measurements into two disjoint sets, in which one set is used as input to the network, and the other is used to define the loss function. This work received the 2020 IEEE ISBI Best Paper Award.
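
To illustrate the splitting idea, the sketch below partitions a boolean mask of acquired measurement locations into two disjoint masks: `theta`, whose measurements would be fed to the network, and `lam`, whose measurements are held out to define the loss. The uniform random selection, the split fraction `rho`, the masked mean-squared-error loss, and the helper names `split_measurements` and `masked_mse` are all assumptions for illustration, not the published SSDU implementation.

```python
import numpy as np


def split_measurements(omega: np.ndarray, rho: float = 0.4, seed: int = 0):
    """Split acquired locations `omega` into disjoint input (theta) and loss (lam) masks.

    omega: boolean array marking acquired measurement locations.
    rho: fraction of acquired locations assigned to the loss set (assumed value).
    Selection is uniform at random here, which is an assumption of this sketch.
    """
    rng = np.random.default_rng(seed)
    idx = np.flatnonzero(omega)                 # flat indices of acquired samples
    n_loss = int(round(rho * idx.size))
    loss_idx = rng.choice(idx, size=n_loss, replace=False)
    lam = np.zeros(omega.shape, dtype=bool)
    lam.flat[loss_idx] = True                   # held-out set that defines the loss
    theta = omega & ~lam                        # remaining samples are the network input
    return theta, lam


def masked_mse(pred_kspace: np.ndarray, measured_kspace: np.ndarray, lam: np.ndarray) -> float:
    """Mean squared error evaluated only on the held-out (loss) locations."""
    diff = pred_kspace[lam] - measured_kspace[lam]
    return float(np.mean(np.abs(diff) ** 2))
```

During training, the network would see only the measurements under `theta`, and its output would be compared with the acquired data under `lam`; since both sets come from the same undersampled acquisition, no fully sampled ground truth is required.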
